Imagine you’re standing on a bridge watching two rivers flow below. At first glance, they seem identical: both wide, both calm. But when you look closely, you notice subtle variations in the currents. One flows faster near the banks, while the other has a stronger pull in the middle. In the world of predictive modelling, these rivers represent two distributions of model scores, one for positive outcomes and one for negative outcomes. The Kolmogorov-Smirnov (K-S) statistic is like a careful hydrologist, quantifying how far apart these two currents really are.

For data professionals, this metric serves as a diagnostic compass, indicating whether a model truly discriminates between classes or is merely drifting. It’s not just about knowing the average behaviour of the data; it’s about measuring how far signal stands apart from noise. Understanding this concept is central for anyone sharpening their analytical instincts through a Data Analyst course in Delhi, where such nuances can make or break a model’s credibility.

The Art of Separation: What the K-S Statistic Really Measures

Picture two groups of marathon runners: one represents customers who will buy your product (positives), the other those who won’t (negatives). Both start at the same line, and each runner ends up at a point on the track set by the probability your model predicts for them. The Kolmogorov-Smirnov statistic measures the maximum distance between the cumulative distributions of these two groups along the race track.

In simpler terms, it asks: at what point in the probability range do these two groups differ the most? The larger that difference, the better your model can tell them apart. A K-S value close to 1 (or 100%) indicates sharp separation, while a value near zero signals overlap: your model is confusing buyers with non-buyers.
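The idea can be sketched in a few lines of plain Python. This is a minimal illustration, not a library implementation: it assumes labels are coded 1 for positives and 0 for negatives, and the function name `ks_statistic` and the sample data are hypothetical. It evaluates both empirical CDFs at every observed score and keeps the largest gap, along with the score (threshold) where that gap first occurs.

```python
from bisect import bisect_right


def ks_statistic(scores, labels):
    """Hypothetical helper: return (ks, threshold), the largest gap between
    the empirical CDFs of positive and negative scores and the score at
    which it first occurs. Assumes labels are 1 (positive) or 0 (negative)."""
    pos = sorted(s for s, y in zip(scores, labels) if y == 1)
    neg = sorted(s for s, y in zip(scores, labels) if y == 0)
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative")

    best_gap, best_threshold = 0.0, None
    # Empirical CDFs only change at observed scores, so checking each
    # distinct score is enough to find the maximum gap.
    for t in sorted(set(scores)):
        cdf_pos = bisect_right(pos, t) / len(pos)  # share of positives scored <= t
        cdf_neg = bisect_right(neg, t) / len(neg)  # share of negatives scored <= t
        gap = abs(cdf_neg - cdf_pos)
        if gap > best_gap:
            best_gap, best_threshold = gap, t
    return best_gap, best_threshold


# Made-up scores for eight customers (1 = bought, 0 = did not buy).
scores = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
labels = [0, 0, 0, 1, 0, 1, 1, 1]
print(ks_statistic(scores, labels))  # (0.75, 0.3)
```

Here 75% of non-buyers but none of the buyers score at or below 0.3, so K-S = 0.75, and 0.3 is the "maximum distinction" threshold. For real data, `scipy.stats.ks_2samp(pos_scores, neg_scores)` computes the same two-sample statistic.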

This perspective is powerful because it reflects discrimination rather than accuracy. It doesn’t count how many predictions are correct at some fixed cutoff; instead, it focuses on whether your model’s scores recognise the inherent difference between the two populations.

Why Analysts Treasure It: Beyond Accuracy Metrics

Accuracy may feel satisfying, but it can be deceptive, like judging a student’s skill by attendance rather than performance. The K-S statistic looks beyond such superficial scores to the heart of predictive power: how well a model distinguishes between outcomes.
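A small, made-up example shows how the two can disagree. On imbalanced data, a "model" that gives every customer the same score achieves high accuracy simply by predicting the majority class, yet its K-S is zero because the two CDFs are identical. The helper names, the 0.5 cutoff, and the 95/5 split below are illustrative assumptions.

```python
def accuracy(scores, labels, cutoff=0.5):
    """Share of cases where the prediction (score >= cutoff) matches the label."""
    hits = sum((s >= cutoff) == (y == 1) for s, y in zip(scores, labels))
    return hits / len(labels)


def ks(scores, labels):
    """Maximum vertical gap between the empirical CDFs of positive and negative scores."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    return max(
        abs(sum(s <= t for s in neg) / len(neg) - sum(s <= t for s in pos) / len(pos))
        for t in set(scores)
    )


# Hypothetical imbalanced data: 95 negatives, 5 positives, and a "model"
# that assigns everyone the same low score of 0.1.
labels = [0] * 95 + [1] * 5
flat_scores = [0.1] * 100

print(accuracy(flat_scores, labels))  # 0.95: looks impressive
print(ks(flat_scores, labels))        # 0.0: no separation at all
```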

For instance, in credit scoring, the K-S value indicates whether a model can meaningfully distinguish between good and bad borrowers. A higher K-S score means the model’s scores separate the two groups more cleanly, which is evidence that it isn’t assigning scores arbitrarily. Similarly, marketers use it to separate responsive customers from indifferent ones, so that campaigns reach the people most likely to respond.
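In credit scoring, K-S is often reported as a table: accounts are sorted from riskiest to safest score, cut into bands (commonly deciles), and the cumulative share of bad and good borrowers captured is tracked band by band; the K-S is the largest difference between those two cumulative shares. The sketch below assumes the model outputs a probability of being bad (higher means riskier), that `bad_flags` uses 1 for a bad borrower, and that the portfolio contains at least one bad and one good; the function name and data are hypothetical. Because only band edges are checked, and tied scores straddling a boundary are split arbitrarily, the banded value can differ slightly from the exact K-S.

```python
def ks_table(scores, bad_flags, n_bins=10):
    """Hypothetical banded K-S report. Returns (rows, ks) where each row is
    (band, cumulative share of bads, cumulative share of goods, gap)."""
    ranked = sorted(zip(scores, bad_flags), key=lambda r: r[0], reverse=True)
    total_bad = sum(bad_flags)
    total_good = len(bad_flags) - total_bad
    rows, cum_bad, cum_good = [], 0, 0
    for band in range(n_bins):
        # Integer arithmetic gives roughly equal-sized bands.
        start = band * len(ranked) // n_bins
        end = (band + 1) * len(ranked) // n_bins
        chunk = ranked[start:end]
        cum_bad += sum(flag for _, flag in chunk)
        cum_good += sum(1 - flag for _, flag in chunk)
        bad_share, good_share = cum_bad / total_bad, cum_good / total_good
        rows.append((band + 1, bad_share, good_share, abs(bad_share - good_share)))
    return rows, max(row[3] for row in rows)


# Made-up portfolio of ten borrowers, split into five bands of two.
scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1, 0.05]
bad_flags = [1, 1, 0, 1, 0, 0, 0, 0, 0, 0]
rows, ks = ks_table(scores, bad_flags, n_bins=5)
for band, bad_share, good_share, gap in rows:
    print(f"band {band}: bads {bad_share:.0%}  goods {good_share:.0%}  gap {gap:.0%}")
print(f"K-S = {ks:.3f}")  # K-S = 0.857
```

In this toy portfolio the top two bands capture every bad borrower but only one of the seven good ones, which is where the gap peaks.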

Those pursuing a Data Analyst course in Delhi soon learn that metrics like K-S are not optional extras; they’re fundamental in industries where misclassification can mean millions in losses. The metric also adds interpretability to performance evaluation, turning abstract probabilities into concrete, business-ready insights.

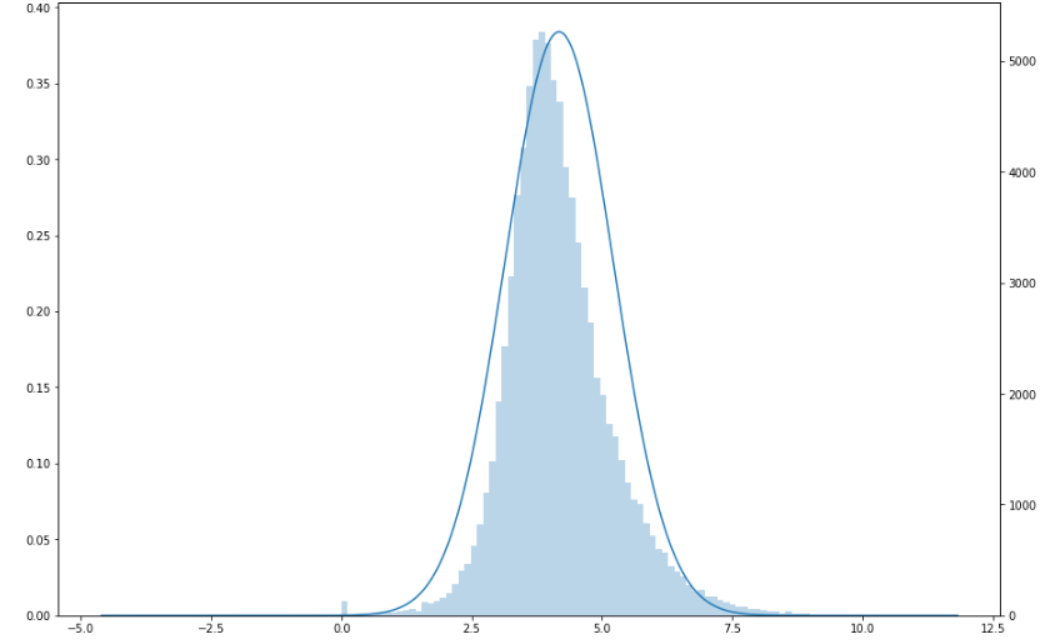

The Curve of Truth: Visualising Distributions

One of the most intuitive aspects of the Kolmogorov-Smirnov statistic is how easily it can be visualised. If you plot the cumulative distribution functions (CDFs) of the model’s scores for positives and negatives, the K-S statistic is the maximum vertical gap between the two curves. That gap, like the widest distance between the banks of two diverging rivers, tells you where your model achieves its best differentiation.
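The curves themselves are simple to compute before plotting. This minimal sketch (function name and data hypothetical, labels assumed to be 1 for positive and 0 for negative) evaluates both empirical CDFs at every distinct score and returns the point where the vertical gap is widest, which is where the K-S statistic would be read off the chart.

```python
def cdf_curves(scores, labels):
    """Return [(t, F_neg(t), F_pos(t)), ...] at each distinct score t,
    plus the point where |F_neg(t) - F_pos(t)| is largest."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    points = []
    for t in sorted(set(scores)):
        f_neg = sum(s <= t for s in neg) / len(neg)  # share of negatives at or below t
        f_pos = sum(s <= t for s in pos) / len(pos)  # share of positives at or below t
        points.append((t, f_neg, f_pos))
    # max() returns the first point with the widest gap if several tie.
    widest = max(points, key=lambda p: abs(p[1] - p[2]))
    return points, widest


scores = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
labels = [0, 0, 0, 1, 0, 1, 1, 1]
points, widest = cdf_curves(scores, labels)
print(widest)  # (0.3, 0.75, 0.0)
```

To draw the chart, the `t` values and each CDF column can be passed to a plotting library as step curves, for example matplotlib’s `plt.step(xs, ys, where="post")`, since an empirical CDF stays flat until the next observed score.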

This visual is more than just a chart. It shows how predictions align, or fail to align, with reality. When the curves are far apart, the model clearly separates the two classes. When they overlap, it’s a red flag: the model struggles to distinguish signal from noise. Analysts often examine this plot before computing the numerical score because it captures the essence of the statistic: the separation that defines model performance.

Applications Across Industries: From Finance to Fraud Detection

The K-S statistic has found a home in sectors where risk and precision are inseparable. Banks rely on it to evaluate credit risk models, checking whether borrowers likely to default are clearly distinguishable from reliable payers. E-commerce companies use it to assess fraud models, spotting the score at which genuine and suspicious transactions diverge most clearly.

In healthcare, predictive models validated with K-S help identify patients at higher risk of complications, supporting timely intervention. In insurance, it assists in risk segmentation, aligning policy pricing with actual likelihoods rather than assumptions. The common thread across these applications is trust: trust in data-driven decisions grounded in rigorous validation.

A Subtle Strength: Why K-S Endures Amid Modern Metrics

With newer metrics like AUC-ROC and the F1-score dominating discussions, the K-S statistic remains a quiet powerhouse. What makes it special is its simplicity and interpretability. It’s not just another number; it’s a measure of separation that aligns naturally with business sense. You can tell stakeholders, “At this probability threshold, we achieve maximum distinction between good and bad outcomes,” and they’ll immediately understand the implication.

In practice, analysts often use K-S alongside AUC to form a comprehensive view of model performance. While AUC summarises discrimination across all thresholds, the K-S statistic pinpoints where that discrimination peaks. It’s a tactical lens within a strategic framework, and both are necessary for mastering predictive modelling.
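The two views can be computed side by side. The sketch below is illustrative, with hypothetical helper names and made-up data. AUC uses its rank (Mann-Whitney) form: the probability that a randomly chosen positive outscores a randomly chosen negative. K-S is the largest gap between the two CDFs, which equals the largest gap between the true positive rate and the false positive rate across thresholds (the point where the ROC curve sits furthest from the diagonal). The pairwise loop grows quadratically with sample size and is meant only for small examples.

```python
def auc(scores, labels):
    """ROC AUC in its Mann-Whitney form: the share of (positive, negative)
    pairs where the positive scores higher, counting ties as half."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def ks(scores, labels):
    """Largest vertical gap between the empirical CDFs of the two classes."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    return max(
        abs(sum(s <= t for s in neg) / len(neg) - sum(s <= t for s in pos) / len(pos))
        for t in set(scores)
    )


scores = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
labels = [0, 0, 0, 1, 0, 1, 1, 1]
print(auc(scores, labels))  # 0.9375: strong ranking overall
print(ks(scores, labels))   # 0.75: peak separation at a single cutoff
```

The numbers tell complementary stories: AUC says the model ranks buyers above non-buyers in about 94% of pairs, while K-S says the best single cutoff opens a 75-point gap between the two groups’ cumulative shares.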

Conclusion

In the grand orchestra of statistical metrics, the Kolmogorov-Smirnov statistic plays the role of the conductor, bringing clarity and harmony to the chaos of predictions. It doesn’t just assess performance; it reveals the model’s soul, showing how well it separates the likely from the unlikely. For professionals venturing into analytics, mastering these metrics transforms raw numbers into stories of insight, risk, and opportunity.

Much like the two rivers that started our journey, understanding where paths diverge tells us everything about where we’re headed. And for learners honing these analytical instincts through structured learning, perhaps through a Data Analyst course in Delhi, grasping tools like the K-S statistic is what bridges the gap between curiosity and competence, between data and decision.